Snowplow Web Package

The package source code can be found in the snowplow/dbt-snowplow-web repo, and the docs for the model design here.

The package contains a fully incremental model that transforms raw web event data generated by the Snowplow JavaScript tracker into a series of derived tables of varying levels of aggregation.

The Snowplow web data model aggregates Snowplow's out-of-the-box page view and page ping events to create a set of derived tables (page views, sessions and users) that contain many useful dimensions, as well as calculated measures such as time engaged and scroll depth.

Overview

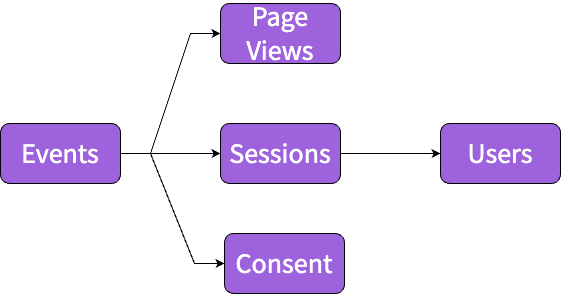

This model consists of a series of modules, each producing a table which serves as the input to the next module. The 'standard' modules are:

- Base: Performs the incremental logic, outputting the table snowplow_web_base_events_this_run, which contains a de-duped data set of all events required for the current run of the model.
- Page Views: Aggregates event level data to a page view level, page_view_id, outputting the table snowplow_web_page_views.
- Sessions: Aggregates page view level data to a session level, domain_sessionid, outputting the table snowplow_web_sessions.
- Users: Aggregates session level data to a users level, domain_userid, outputting the table snowplow_web_users.
- User Mapping: Provides a mapping between user identifiers, domain_userid and user_id, outputting the table snowplow_web_user_mapping. This can be used for session stitching.
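To make the chain of modules concrete, here is a small in-memory sketch of the same hierarchy: de-duplicate events, then roll them up to page views, sessions and users. It is only an illustration; the package itself is SQL run by dbt, and the event rows and the helper names dedupe and roll_up are hypothetical, reduced to the identifiers named in the list above.

```python
# Illustrative only: hypothetical event rows, reduced to the identifiers named
# in the module list. The real package does this in SQL via dbt.
events = [
    {"event_id": "e1", "page_view_id": "pv1", "domain_sessionid": "s1", "domain_userid": "u1"},
    {"event_id": "e1", "page_view_id": "pv1", "domain_sessionid": "s1", "domain_userid": "u1"},  # duplicate
    {"event_id": "e2", "page_view_id": "pv1", "domain_sessionid": "s1", "domain_userid": "u1"},
    {"event_id": "e3", "page_view_id": "pv2", "domain_sessionid": "s1", "domain_userid": "u1"},
    {"event_id": "e4", "page_view_id": "pv3", "domain_sessionid": "s2", "domain_userid": "u1"},
]


def dedupe(rows):
    """Base step (hypothetical helper): keep the first row seen for each event_id."""
    seen = set()
    out = []
    for row in rows:
        if row["event_id"] not in seen:
            seen.add(row["event_id"])
            out.append(row)
    return out


def roll_up(rows, key, parent_keys):
    """Group rows by `key`, counting child rows in n and carrying parent identifiers."""
    grouped = {}
    for row in rows:
        if row[key] not in grouped:
            grouped[row[key]] = {key: row[key], "n": 0, **{p: row[p] for p in parent_keys}}
        grouped[row[key]]["n"] += 1
    return list(grouped.values())


base = dedupe(events)  # events required for this run, de-duped
page_views = roll_up(base, "page_view_id", ["domain_sessionid", "domain_userid"])  # n = events
sessions = roll_up(page_views, "domain_sessionid", ["domain_userid"])  # n = page views
users = roll_up(sessions, "domain_userid", [])  # n = sessions

print(len(base), len(page_views), len(sessions), len(users))  # 4 3 2 1
```

Each level only reads the output of the level below it, which mirrors how each module's table feeds the next.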

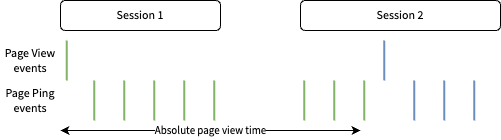

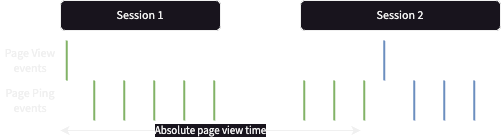

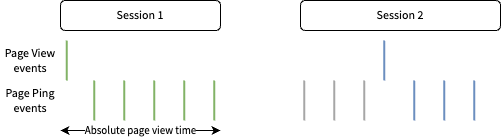

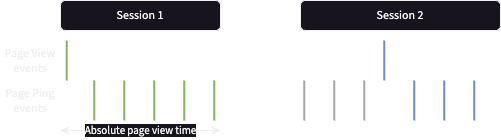

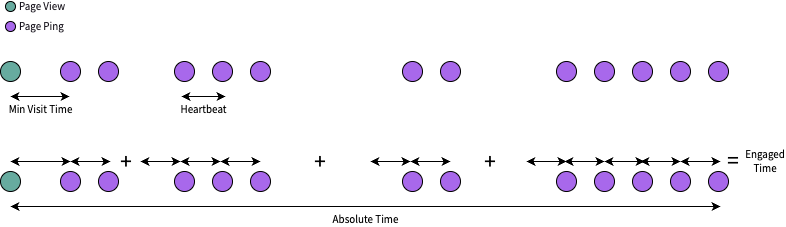

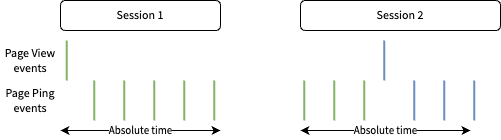

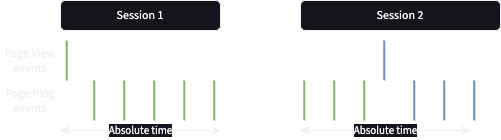

Engaged vs. Absolute Time

At the page view and session levels we provide two measures of time: absolute time, how long a user had the page open, and engaged time, how much of that time the user was actively on the page. Engaged time is often a strong predictor of customer conversion, such as a purchase or a sign-up, or whatever conversion means in your domain.

Calculating absolute time is simple: it is the difference between the derived_tstamp of the first and last events (page views or page pings) within that page view or session.
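As a minimal sketch, absolute time is just the span between the earliest and latest derived_tstamp values. The function name and the sample timestamps are hypothetical, and returning 0 for an empty input is an assumption made for this sketch.

```python
from datetime import datetime


def absolute_time_in_s(derived_tstamps):
    """Seconds between the earliest and latest derived_tstamp of the page view
    and page ping events in one page view or session (0 if there are none)."""
    if not derived_tstamps:
        return 0
    return int((max(derived_tstamps) - min(derived_tstamps)).total_seconds())


# Hypothetical timestamps: a page view followed by three page pings.
tstamps = [
    datetime(2023, 1, 1, 12, 0, 0),
    datetime(2023, 1, 1, 12, 0, 5),
    datetime(2023, 1, 1, 12, 0, 15),
    datetime(2023, 1, 1, 12, 10, 25),
]
print(absolute_time_in_s(tstamps))  # 625
```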

The calculation for engaged time is more complicated. It is derived from page pings, which means that if the user isn't active on your content, the engaged time does not increase. Consider a single page view of an article: partway through, the reader sees something they don't understand, so they open a new tab to look it up. They stumble upon a Wikipedia page on the topic, go down a rabbit hole, and 10 minutes later make it back to your site to finish the article. In this case there will be a 10-minute gap in the page pings in the events data.

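To adjust for these gaps, we calculate engaged time as the time between pings (your heartbeat) multiplied by the number of pings, ignoring the first one, plus the delay before the first ping (your minimum visit length). The formula is:

$$
t_{\text{engaged}} = t_{\text{heartbeat}} \times (n_{\text{pings}} - 1) + t_{\text{min visit length}}
$$

where $t_{\text{heartbeat}}$ is the heartbeat, $n_{\text{pings}}$ is the number of page pings and $t_{\text{min visit length}}$ is the minimum visit length. The figure below shows an example of this for a single page view.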
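Applying the formula engaged time = heartbeat × (number of pings − 1) + minimum visit length to the article example shows why gaps don't inflate engaged time. This is a sketch assuming a 10-second heartbeat and a 5-second minimum visit length as example values, and assuming (for the sketch only) that a page view with no pings gets 0 seconds; the ping times are made up.

```python
def engaged_time_in_s(n_pings, heartbeat=10, min_visit_length=5):
    """heartbeat * (n_pings - 1) + min_visit_length.

    Assumption for this sketch: a page view with no pings gets 0 seconds.
    """
    if n_pings == 0:
        return 0
    return heartbeat * (n_pings - 1) + min_visit_length


# Hypothetical ping times in seconds after the page view (t=0): about two
# minutes of reading, a ten-minute gap, then roughly one more minute.
before_gap = list(range(5, 120, 10))  # 5, 15, ..., 115 -> 12 pings
after_gap = list(range(725, 780, 10))  # 725, 735, ..., 775 -> 6 pings
pings = before_gap + after_gap

engaged = engaged_time_in_s(len(pings))  # 10 * 17 + 5 = 175
absolute = max(pings)  # last ping minus the page view at t=0 -> 775
print(engaged, absolute)  # 175 775
```

The ten minutes spent on the other tab add to absolute time but not to engaged time, because no pings are sent during the gap.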

At a session level, this calculation is slightly more involved, as it needs to happen per page view and account for stray page pings, but the underlying idea is the same.
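One way to read "per page view", sketched below under the same assumed 10-second heartbeat and 5-second minimum visit length: group the session's pings by page_view_id, apply the page view formula to each group, and sum the results, keeping groups that come from stray pings. This is our illustration of the idea, not the package's SQL, and the function names are hypothetical.

```python
from collections import Counter


def engaged_time_in_s(n_pings, heartbeat=10, min_visit_length=5):
    """Page view formula: heartbeat * (n_pings - 1) + min_visit_length (0 if no pings)."""
    if n_pings == 0:
        return 0
    return heartbeat * (n_pings - 1) + min_visit_length


def session_engaged_time_in_s(ping_page_view_ids, heartbeat=10, min_visit_length=5):
    """Group a session's pings by page_view_id, apply the formula per group, and sum.

    Stray pings are not dropped: their page_view_id forms a group like any other.
    """
    pings_per_view = Counter(ping_page_view_ids)
    return sum(
        engaged_time_in_s(n, heartbeat, min_visit_length) for n in pings_per_view.values()
    )


# page_view_id of each page ping in one hypothetical session; pv9 has no
# page_view event in this session, so its pings are stray.
session_pings = ["pv1"] * 4 + ["pv2"] * 2 + ["pv9"] * 3
print(session_engaged_time_in_s(session_pings))  # 35 + 15 + 25 = 75
```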

Optional Modules

Consent Tracking Custom Module

This custom module is built as an extension of the dbt-snowplow-web package. It transforms raw consent_preferences and cmp_visible event data into derived tables for easier querying. These events are generated by the Enhanced Consent plugin of the JavaScript tracker.

For the incremental logic within the module to work, you must use RDB Loader v4.0.0 or later, as the custom module relies on the additional load_tstamp field for dbt native incrementalisation.

Whenever a new consent version starts being tracked, the model expects an allow_all event so that it can attribute events to the full list of the latest consent scopes. We advise sending a test allow_all event straight after deployment so that the model can process the data accurately.

To enable this optional module, the web package must be correctly configured. Please refer to the snowplow-web dbt quickstart guide for a full breakdown of how to set it up.

Overview

This custom module consists of a series of dbt models which produce the following aggregated models from the raw consent tracking events:

- snowplow_web_consent_log: Snowplow incremental table showing the audit trail of consent and Consent Management Platform (cmp) events
- snowplow_web_consent_users: Incremental table of user consent tracking stats
- snowplow_web_consent_totals: Summary of the latest consent status, per consent version
- snowplow_web_consent_scope_status: Aggregate of the current number of users consented to each consent scope
- snowplow_web_cmp_stats: Used for modeling cmp_visible events and related metrics
- snowplow_web_consent_versions: Incremental table used to keep track of each consent version and its validity

Operation

It is assumed that the dbt_snowplow_web package is already installed and configured as per the Quick Start instructions.

Enable the module

To enable the custom module simply copy the following code snippet to your own dbt_project.yml file:

```yaml
# dbt_project.yml
models:
  snowplow_web:
    optional_modules:
      consent:
        enabled: true
        +schema: "derived"
        +tags: ["snowplow_web_incremental", "derived"]
        scratch:
          +schema: "scratch"
          +tags: "scratch"
          bigquery:
            enabled: "{{ target.type == 'bigquery' | as_bool() }}"
          databricks:
            enabled: "{{ target.type in ['databricks', 'spark'] | as_bool() }}"
          default:
            enabled: "{{ target.type in ['redshift', 'postgres'] | as_bool() }}"
          snowflake:
            enabled: "{{ target.type == 'snowflake' | as_bool() }}"
```
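The four scratch sub-folders in this snippet (bigquery, databricks, default and snowflake) are each switched on by a condition on target.type. As a quick check of which one a given adapter selects, the intended conditions can be restated in Python. This is only a restatement for illustration; dbt evaluates the real Jinja expressions, and the names SCRATCH_CONDITIONS and enabled_scratch_folders are hypothetical.

```python
# Restates the intended `enabled` conditions from the scratch section of the
# dbt_project.yml snippet. Hypothetical helper; dbt evaluates the real Jinja.
SCRATCH_CONDITIONS = {
    "bigquery": lambda target_type: target_type == "bigquery",
    "databricks": lambda target_type: target_type in ["databricks", "spark"],
    "default": lambda target_type: target_type in ["redshift", "postgres"],
    "snowflake": lambda target_type: target_type == "snowflake",
}


def enabled_scratch_folders(target_type):
    """Names of the scratch sub-folders whose condition is true for target_type."""
    return [name for name, condition in SCRATCH_CONDITIONS.items() if condition(target_type)]


for adapter in ["bigquery", "spark", "postgres", "snowflake", "duckdb"]:
    print(adapter, enabled_scratch_folders(adapter))
```

For each supported adapter exactly one folder is enabled; an adapter not listed in any condition enables none.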

Run the module

If you have previously run the web model without this optional module enabled, you can simply enable the module and run dbt run --selector snowplow_web as many times as needed for this module to catch up with your other data. If you only wish to process this module's data from a specific date, be sure to change your snowplow__start_date, or refer to the Custom module section for a detailed guide on how to do this most efficiently.

If you haven't run the web package before, you can run it with dbt run --selector snowplow_web, either through your CLI or within dbt Cloud; Enterprise customers can also use the BDP console. In this situation all models start in sync, as no events have been processed yet.

Stray Page Pings

Stray page pings are page pings within a session that do not have a corresponding page_view event within the same session. The most common cause is someone returning to a tab after their session has timed out, without refreshing the page. The page_view event exists in some other session, but there is no guarantee that both sessions will be processed in the same run, which could lead to different results. Depending on your site content and user behavior, the prevalence of sessions with stray page pings can vary greatly. For example, with long-form content we have seen around 10% of all sessions contain only stray page pings (i.e. no page_view events).
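Following that definition, a stray ping can be detected by checking whether its (domain_sessionid, page_view_id) pair has a page_view event. A minimal sketch, assuming simplified event rows with only event_name, page_view_id and domain_sessionid; the rows and the function name find_stray_pings are hypothetical.

```python
def find_stray_pings(events):
    """Return page_ping events whose page_view_id has no page_view event
    in the same domain_sessionid."""
    viewed = {
        (e["domain_sessionid"], e["page_view_id"])
        for e in events
        if e["event_name"] == "page_view"
    }
    return [
        e
        for e in events
        if e["event_name"] == "page_ping"
        and (e["domain_sessionid"], e["page_view_id"]) not in viewed
    ]


events = [
    {"event_name": "page_view", "page_view_id": "pv1", "domain_sessionid": "s1"},
    {"event_name": "page_ping", "page_view_id": "pv1", "domain_sessionid": "s1"},
    # The tab stayed open past the session timeout, so later pings for pv1
    # arrive in a new session, s2, which has no page_view event of its own.
    {"event_name": "page_ping", "page_view_id": "pv1", "domain_sessionid": "s2"},
    {"event_name": "page_ping", "page_view_id": "pv1", "domain_sessionid": "s2"},
]
stray = find_stray_pings(events)
print(len(stray), {e["domain_sessionid"] for e in stray})  # 2 {'s2'}
```

Session s2 here is an example of a session containing only stray page pings.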

We take different approaches to adjust for these stray pings at the page view and session levels, which can lead to differences between the two tables, but each is as accurate as we can currently make it.

Sessions

Because our processing always reprocesses full sessions, the sessions table includes all stray page ping events, as well as all other page view and page ping events. If a session starts with a page ping, we adjust its start time down by your minimum visit length, and we include sessions that contain only (stray) pings. We also count page views as the number of unique page_view_ids (from the web_page context) rather than the number of page_view events, so that stray pings are included, and we account for stray pings in the engaged time. Overall this gives a more accurate view of a session, treating stray pings as if they had a corresponding page_view event in the same session, even when they did not.
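Two of those session-level adjustments, counting unique page_view_ids and moving the start time back when the session opens with a ping, are sketched below. It assumes a 5-second minimum visit length, simplified event rows, and a session with at least one event; summarise_session is a hypothetical name, not part of the package.

```python
from datetime import datetime, timedelta


def summarise_session(events, min_visit_length=5):
    """Session page view count and start time, keeping stray pings.

    Assumes `events` is non-empty and holds one session's page views and pings.
    """
    ordered = sorted(events, key=lambda e: e["derived_tstamp"])
    first = ordered[0]
    start = first["derived_tstamp"]
    if first["event_name"] == "page_ping":
        # The page had been open at least min_visit_length seconds before the first ping.
        start -= timedelta(seconds=min_visit_length)
    # Count distinct page_view_ids, not page_view events, so stray pings still count.
    page_views = len({e["page_view_id"] for e in ordered})
    return {"page_views": page_views, "start_tstamp": start}


t0 = datetime(2023, 1, 1, 12, 0, 0)
# A session containing only stray pings for a single page view.
session = [
    {"event_name": "page_ping", "page_view_id": "pv1", "derived_tstamp": t0},
    {"event_name": "page_ping", "page_view_id": "pv1", "derived_tstamp": t0 + timedelta(seconds=10)},
]
summary = summarise_session(session)
print(summary["page_views"], summary["start_tstamp"])  # 1 2023-01-01 11:59:55
```

Counting page_view events instead would report 0 page views for this session, even though the user was clearly reading a page.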

As a result, you may see misalignment between the sessions table and sessions recalculated directly from the page views table. This is because we discard stray pings during page view processing, as discussed below, so the values in the sessions table (page_views, engaged_time_in_s and absolute_time_in_s) may be higher, but are more accurate at a session level.

Page Views

For page views, because we cannot guarantee that the session containing the page_view event and the sessions containing all subsequent page_ping events are processed within the same run, we choose to discard all stray page pings. Otherwise, different run configurations could produce different results.

Currently we do not process these discarded stray page pings in any way, meaning that engaged time and scroll depth in these cases may underestimate the true value. Due to session-level reprocessing this remains a complicated issue to resolve, but please let us know if you would like to help solve this!