The Snowplow Collector on AWS

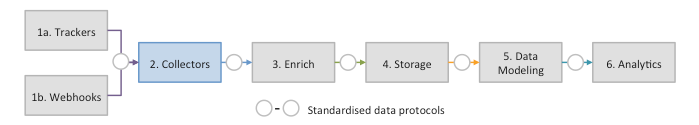

On an AWS pipeline, the Snowplow Stream Collector receives events sent over HTTP(S) and writes them to a raw Kinesis stream. From there, the Snowplow validation and enrichment job picks up and processes the data.

On an AWS pipeline, the basic setup steps are:

- In the AWS console, create two Kinesis streams to which the collector will write good payloads and bad events.

- (Optional) Set up an SQS buffer to handle spikes in traffic.

- Configure and run the collector by following the main collector documentation, which describes the core concepts of how the collector works and its configuration options.

- (Optional) Configure and run Snowbridge with an SQS source and a Kinesis target to move data from your SQS buffer back to the primary Kinesis stream.

- (Optional) Sink the raw data to S3 using the Snowplow S3 loader. This is recommended in production so that you keep a copy of the raw data before any processing.
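
If you prefer to script the first step rather than use the AWS console, the two Kinesis streams can be created with the AWS SDK. The following is a minimal sketch in Python using `boto3`, not an official Snowplow tool. The stream names (`snowplow-raw-good`, `snowplow-raw-bad`), the shard count of 1, and the region in the usage comment are illustrative assumptions; substitute the values your collector configuration expects.

```python
def create_collector_streams(
    kinesis,
    good_stream="snowplow-raw-good",  # hypothetical name for the good stream
    bad_stream="snowplow-raw-bad",    # hypothetical name for the bad stream
    shard_count=1,                    # assumed starting size; tune for your traffic
):
    """Create the two streams the collector writes to and wait until they exist.

    `kinesis` is a boto3 Kinesis client. create_stream raises
    ResourceInUseException if a stream with that name already exists.
    """
    names = [good_stream, bad_stream]
    for name in names:
        kinesis.create_stream(StreamName=name, ShardCount=shard_count)

    # Stream creation is asynchronous, so block until both streams are ready.
    waiter = kinesis.get_waiter("stream_exists")
    for name in names:
        waiter.wait(StreamName=name)
    return names


# Example usage (requires boto3 and AWS credentials; the region is an assumption):
#
#   import boto3
#   client = boto3.client("kinesis", region_name="eu-west-1")
#   create_collector_streams(client)
```

The function takes the client as an argument so that it can be exercised against a stub before pointing it at a real AWS account.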