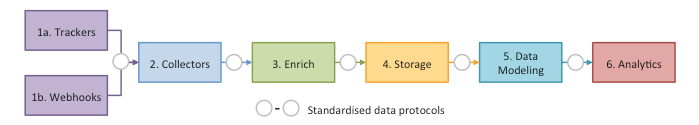

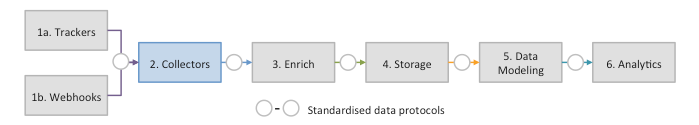

Manual Setup on AWS

Installation Guide

- Set up your AWS environment

- Set up a Snowplow Collector

- Set up one or more sources using trackers or webhooks

- Set up Enrich

- Set up alternative data stores (e.g. Redshift, PostgreSQL)

- Data modeling in Redshift

- Analyze your data!

Set up a Snowplow Collector

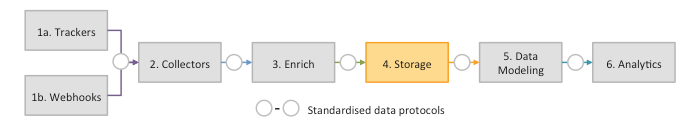

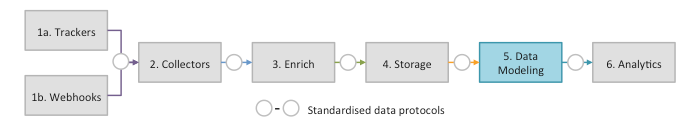

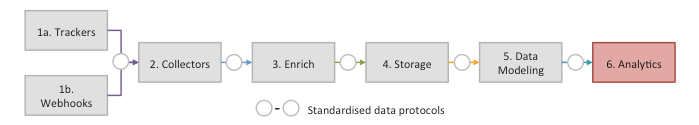

The Snowplow collector receives data from Snowplow trackers and webhooks and writes it to a stream for further processing. Setting up a collector is the first step in the Snowplow setup process.

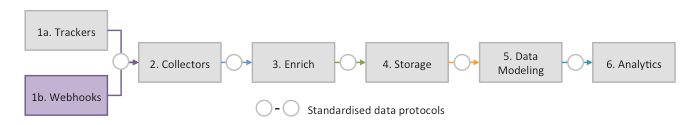

Set up a Data Source

Snowplow supports two types of data sources: trackers (SDKs) for integrating your own apps, and webhooks for ingesting data from third parties via HTTP(S).

Set up a Snowplow Tracker

Snowplow trackers generate event data and send it to Snowplow collectors to be captured. The most popular Snowplow tracker to date is the JavaScript Tracker, which is integrated into websites (either directly or via a tag management solution) in the same way as any other web analytics tracker (e.g. Google Analytics or Omniture tags).
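To make the tracker-to-collector hand-off concrete, here is a minimal sketch in Python of how a page-view event could be encoded as a GET request to a collector. It assumes the collector accepts tracker-protocol query parameters on its `/i` pixel endpoint. The host `collector.example.com`, the function name `build_page_view_url`, and the tracker version label are hypothetical. A real tracker SDK handles batching, retries, and many more fields, so treat this as an illustration rather than a replacement for one.

```python
import time
import uuid
from urllib.parse import urlencode


def build_page_view_url(collector_host: str, app_id: str,
                        page_url: str, page_title: str) -> str:
    """Build a GET URL carrying a single page-view event (illustrative only)."""
    params = {
        "e": "pv",                             # event type: page view
        "p": "web",                            # platform
        "tv": "sketch-0.1",                    # tracker version label (made up here)
        "aid": app_id,                         # application id
        "url": page_url,                       # page URL
        "page": page_title,                    # page title
        "eid": str(uuid.uuid4()),              # unique event id
        "dtm": str(int(time.time() * 1000)),   # device timestamp, ms since epoch
    }
    # urlencode percent-encodes each value so the page URL survives as one parameter.
    return f"https://{collector_host}/i?{urlencode(params)}"


if __name__ == "__main__":
    print(build_page_view_url("collector.example.com", "my-site",
                              "https://example.com/pricing", "Pricing"))
```

Sending the resulting URL with any HTTP client would deliver one event, assuming the collector is reachable at that host.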

Set up a Third-Party Webhook

Snowplow allows you to collect events via the webhooks of supported third-party software.

Webhooks allow this third-party software to send its own internal event streams to Snowplow collectors to be captured. Webhooks are sometimes referred to as "streaming APIs" or "HTTP response APIs".

Note: once you have set up a collector and a tracker or webhook, you can pause and perform the remaining setup steps later, because your data is already being generated and logged. When you eventually set up Enrich, you will be able to process all the data you have logged since setup.

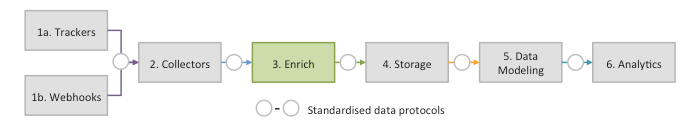

Set up Enrich

The Snowplow enrichment process takes raw events from a collector and:

- Cleans up the data into a format that is easier to parse / analyze

- Enriches the data (e.g. infers the location of the visitor from their IP address and infers the search engine keywords from the query string)

- Stores the cleaned, enriched data

Once you have set up Enrich, the process of taking the raw data generated by the collector and cleaning and enriching it will be automated.

- Set up Enrich now
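As a rough illustration of what one enrichment might do, the sketch below infers search engine keywords from a referrer's query string. It is not Snowplow's actual implementation. The `SEARCH_PARAMS` lookup table, the `enrich` function, and the field names `referrer`, `search_engine`, and `search_terms` are all assumptions made for this example.

```python
from urllib.parse import urlparse, parse_qs

# Hypothetical lookup: referrer host -> query parameter that holds the search terms.
SEARCH_PARAMS = {
    "www.google.com": "q",
    "www.bing.com": "q",
    "search.yahoo.com": "p",
}


def enrich(raw_event: dict) -> dict:
    """Return a copy of the event with search fields added when they can be inferred."""
    enriched = dict(raw_event)
    referrer = urlparse(raw_event.get("referrer", ""))
    param = SEARCH_PARAMS.get(referrer.netloc)
    # parse_qs decodes percent-escapes and turns '+' into spaces.
    terms = parse_qs(referrer.query).get(param, []) if param else []
    enriched["search_engine"] = referrer.netloc if terms else None
    enriched["search_terms"] = terms[0] if terms else None
    return enriched


if __name__ == "__main__":
    print(enrich({"referrer": "https://www.google.com/search?q=event+analytics"}))
```

Events whose referrer is missing or is not a known search engine pass through with both added fields set to `None`, so downstream consumers see a consistent shape.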

Set up alternative data stores (e.g. Redshift, Snowflake, Elastic)

Most Snowplow users store their web event data in at least two places: S3 for processing in Spark (e.g. to enable machine learning via MLlib) and a database (e.g. Redshift) for more traditional OLAP analysis.

The RDB Loader is an EMR step that regularly transfers data from S3 into other databases, e.g. Redshift. If you only wish to process your data using Spark on EMR, you do not need to set up the RDB Loader. However, if you would find it convenient to have your data in another data store (e.g. Redshift), you can set this up at this stage.

Data modeling

Once your data is stored in S3 and Redshift, the basic setup is complete. You now have access to the event stream: a long list of packets of data, where each packet represents a single event. While it is possible to do analysis directly on this event stream, it is common to:

- Join event-level data with other data sets (e.g. customer data)

- Aggregate event-level data into smaller data sets (e.g. sessions)

- Apply business logic (e.g. user segmentation)

We call this process data modeling.
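The aggregation step can be sketched as follows: a minimal Python example that groups event-level rows into sessions, starting a new session whenever a user has been inactive for longer than a threshold. The 30-minute gap, the `sessionize` function, and the field names `user_id` and `timestamp` are assumptions for illustration. In practice this logic would more likely be written as SQL against the event tables.

```python
from datetime import datetime, timedelta

SESSION_GAP = timedelta(minutes=30)  # assumed inactivity threshold


def sessionize(events: list[dict]) -> list[dict]:
    """Aggregate events (each with user_id and timestamp) into per-user sessions."""
    sessions: list[dict] = []
    open_session: dict[str, dict] = {}  # user_id -> that user's latest session
    for event in sorted(events, key=lambda e: (e["user_id"], e["timestamp"])):
        current = open_session.get(event["user_id"])
        # Start a new session for a new user or after a long enough gap.
        if current is None or event["timestamp"] - current["end"] > SESSION_GAP:
            current = {"user_id": event["user_id"], "start": event["timestamp"],
                       "end": event["timestamp"], "event_count": 0}
            sessions.append(current)
            open_session[event["user_id"]] = current
        current["end"] = event["timestamp"]
        current["event_count"] += 1
    return sessions


if __name__ == "__main__":
    start = datetime(2024, 1, 1, 12, 0)
    demo = [{"user_id": "a", "timestamp": start + timedelta(minutes=m)}
            for m in (0, 10, 50)]
    print(len(sessionize(demo)))  # 2: the 40-minute gap splits the events
```

Each resulting row summarizes many events, which is what makes the derived table smaller and easier to join with other data sets.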

Analyze your data!

Now that your data is stored in S3 and potentially also in Redshift, you can start analyzing the event stream or, if a data model has been built, the derived tables in Redshift. As part of the setup guide, we run through the steps necessary to perform some initial analysis and plug in a couple of analytics tools to get you started.

You now have all six Snowplow subsystems working! The Snowplow setup is complete!