What is deployed?

Let's take a look at what is deployed on GCP when you run the quick start example script.

Note: you can easily edit the script to remove certain modules, which gives you the flexibility to design the topology of your pipeline according to your needs.

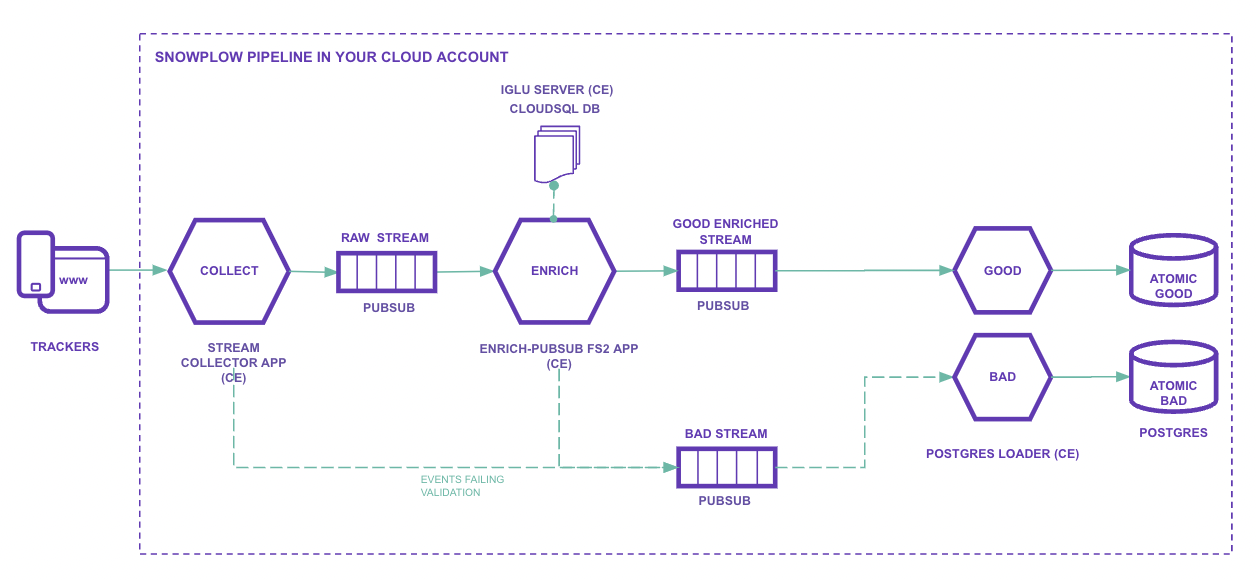

- Postgres

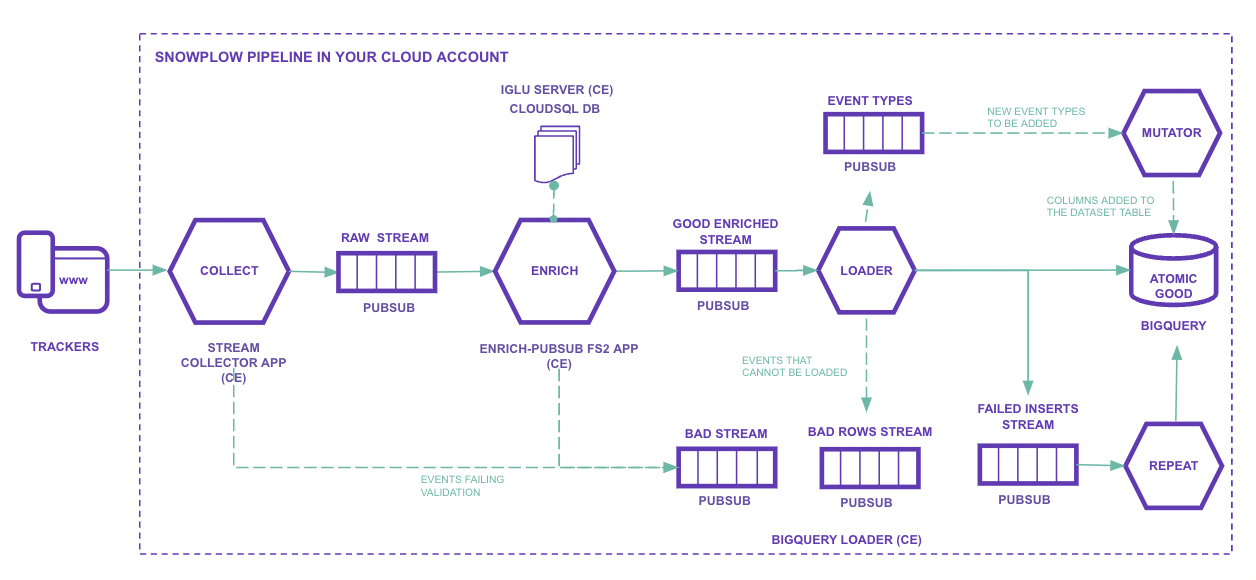

- BigQuery

Collector load balancer

This is an application load balancer for your inbound HTTP/S traffic. Traffic is routed from the load balancer to the collector.

For further details on the resources, default and required input variables, and outputs, see the terraform-google-lb Terraform module.

Stream Collector

This is a Snowplow event collector that receives raw Snowplow events over HTTP, serializes them to a Thrift record format, and then writes them to Pub/Sub. More details can be found here.
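To make "raw Snowplow events over HTTP" concrete, here is a minimal sketch of a single page view request as a tracker might send it. It assumes the POST path and `payload_data` schema from the Snowplow tracker protocol; the hostname, app ID and tracker version string are placeholders, and the request is only built, not sent.

```python
import json
import urllib.request
import uuid

# Assumption: placeholder hostname; use the collector address from your own deployment.
COLLECTOR_URL = "http://collector.example.com"
# Assumption: POST path and payload schema as defined by the Snowplow tracker protocol.
TP2_PATH = "/com.snowplowanalytics.snowplow/tp2"
PAYLOAD_SCHEMA = "iglu:com.snowplowanalytics.snowplow/payload_data/jsonschema/1-0-4"


def build_page_view_request(collector_url, app_id, page_url):
    """Build (but do not send) a POST request carrying one page view event."""
    event = {
        "e": "pv",                 # event type: page view
        "p": "web",                # platform
        "tv": "sketch-0.1.0",      # tracker version (made-up value)
        "aid": app_id,             # application ID
        "eid": str(uuid.uuid4()),  # unique event ID
        "url": page_url,           # URL of the viewed page
    }
    body = json.dumps({"schema": PAYLOAD_SCHEMA, "data": [event]}).encode("utf-8")
    return urllib.request.Request(
        collector_url.rstrip("/") + TP2_PATH,
        data=body,
        headers={"Content-Type": "application/json; charset=utf-8"},
        method="POST",
    )


request = build_page_view_request(COLLECTOR_URL, "my-app", "https://example.com/")
# To actually send it: urllib.request.urlopen(request)
```

In practice a Snowplow tracker builds and sends these requests for you; the sketch only shows the shape of what reaches the collector.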

For further details on the resources, default and required input variables, and outputs, see the collector-pubsub-ce Terraform module.

Stream Enrich

This is a Snowplow app written in Scala which:

- Reads raw Snowplow events off a Pub/Sub topic populated by the Scala Stream Collector

- Validates each raw event

- Enriches each event (e.g. infers the location of the user from their IP address)

- Writes the enriched Snowplow event to the enriched topic

It is designed to be used downstream of the Scala Stream Collector. More details can be found here.

For further details on the resources, default and required input variables, and outputs, see the enrich-pubsub-ce Terraform module.
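The read, validate, enrich and write steps listed above can be pictured with a small in-memory sketch. This is not the Enrich implementation: the topics are plain lists, and `validate`, `enrich` and `geo_lookup` are hypothetical stand-ins for the real schema validation and IP lookup.

```python
from dataclasses import dataclass, field


@dataclass
class Topics:
    """In-memory stand-ins for the Pub/Sub topics."""
    raw: list = field(default_factory=list)
    enriched: list = field(default_factory=list)
    bad: list = field(default_factory=list)


def validate(event):
    """Hypothetical check: an event needs an ID and an IP address."""
    return "event_id" in event and "user_ipaddress" in event


def enrich(event, geo_lookup):
    """Hypothetical enrichment: attach a country looked up from the IP address."""
    enriched = dict(event)
    enriched["geo_country"] = geo_lookup.get(event["user_ipaddress"])
    return enriched


def run_enrich(topics, geo_lookup):
    """Drain the raw topic, routing each event to the enriched or bad topic."""
    while topics.raw:
        event = topics.raw.pop(0)
        if validate(event):
            topics.enriched.append(enrich(event, geo_lookup))
        else:
            topics.bad.append({"error": "validation failed", "payload": event})


topics = Topics(raw=[
    {"event_id": "e1", "user_ipaddress": "203.0.113.7"},
    {"user_ipaddress": "203.0.113.8"},  # missing event_id, so it fails validation
])
run_enrich(topics, geo_lookup={"203.0.113.7": "GB"})
print(len(topics.enriched), len(topics.bad))  # prints "1 1"
```

The point of the sketch is the routing: every raw event ends up in exactly one place, so nothing is silently dropped.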

Pub/Sub topics

Your Pub/Sub topics are key to a non-lossy pipeline: they act as a buffer between components and let you drive real-time use cases from the enriched stream.

For further details on the resources, default and required input variables, and outputs, see the pubsub-topic Terraform module.

Raw topic

Collector payloads are written to this raw Pub/Sub topic before being picked up by the Enrich application.

Enriched topic

Events that have been validated and enriched by the Enrich application are written to this enriched topic.
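For real-time use cases, a consumer of the enriched topic needs to decode each message. The sketch below assumes the enriched event is a tab-separated line whose leading columns follow the order shown; the full format has many more fields, which are ignored here, and the sample values are made up.

```python
# Assumption: leading columns of the enriched TSV format, in order.
LEADING_FIELDS = [
    "app_id", "platform", "etl_tstamp", "collector_tstamp",
    "dvce_created_tstamp", "event", "event_id",
]


def parse_enriched(message_data):
    """Map the first few tab-separated values of an enriched event to names."""
    values = message_data.decode("utf-8").split("\t")
    # An empty string means the field was not set for this event.
    return {name: (value or None) for name, value in zip(LEADING_FIELDS, values)}


sample = "\t".join([
    "my-app", "web", "2024-01-01 00:00:01.000", "2024-01-01 00:00:00.000",
    "", "page_view", "c6ef3124-b53a-4b13-a233-0088f79dcbcb",
]).encode("utf-8")
event = parse_enriched(sample)
print(event["event"], event["dvce_created_tstamp"])  # prints "page_view None"
```

With the `google-cloud-pubsub` client, the bytes passed to `parse_enriched` would typically come from `message.data` inside a subscriber callback. For production use, the Snowplow Analytics SDKs are likely a better fit than hand-written parsing.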

Bad 1 topic

This bad topic is for events that the collector or Enrich fails to process. An event can fail at the collector, for instance because it is too large for the topic, which creates a size violation bad row. It can also fail during enrichment because of a schema violation or an enrichment failure. More details can be found here.
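When monitoring this topic, it helps to see which kinds of failure dominate. The following sketch assumes each bad row is a self-describing JSON document whose `schema` URI has the shape `iglu:<vendor>/<failure type>/jsonschema/<version>`; the sample messages are invented for illustration.

```python
import json
from collections import Counter

# Assumption: vendor prefix used by bad row schemas.
BAD_ROWS_VENDOR = "iglu:com.snowplowanalytics.snowplow.badrows"


def bad_row_type(message_data):
    """Return the failure type encoded in a bad row's schema URI."""
    bad_row = json.loads(message_data)
    # The failure type is the second path segment of the schema URI.
    return bad_row["schema"].split("/")[1]


def make_sample(failure_type, version):
    """Build an invented bad row message for the demonstration below."""
    schema = f"{BAD_ROWS_VENDOR}/{failure_type}/jsonschema/{version}"
    return json.dumps({"schema": schema, "data": {}}).encode("utf-8")


messages = [
    make_sample("schema_violations", "2-0-0"),
    make_sample("size_violation", "1-0-0"),
    make_sample("schema_violations", "2-0-0"),
]
counts = Counter(bad_row_type(m) for m in messages)
print(counts.most_common())
# prints "[('schema_violations', 2), ('size_violation', 1)]"
```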

With Postgres as the destination, no other Pub/Sub topics are deployed. With BigQuery, there is one more topic.

Bad Rows topic

This bad topic contains events that could not be inserted into BigQuery by the loader. This includes data that is not valid against its schema or is corrupted in a way that the loader cannot handle.

BigQuery datasets

There will be one new dataset available with the suffix _snowplow_db. Within it, there will be a table called events. All of your collected events will be available there, generally within a few seconds of being sent into the pipeline.
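A quick way to confirm that events are arriving is to query the most recent rows. The sketch below only builds the SQL string; the project ID and dataset prefix are placeholders, and the selected columns are assumed to be standard fields of the Snowplow events table.

```python
def recent_events_sql(project_id, dataset, limit=10):
    """Build a query for the most recently collected events."""
    if not dataset.endswith("_snowplow_db"):
        raise ValueError("expected a dataset name ending in _snowplow_db")
    table = f"`{project_id}.{dataset}.events`"
    return (
        "SELECT event_id, event, app_id, collector_tstamp\n"
        f"FROM {table}\n"
        "ORDER BY collector_tstamp DESC\n"
        f"LIMIT {int(limit)}"
    )


# Placeholder project and dataset names; substitute your own.
sql = recent_events_sql("my-project", "sp_snowplow_db", limit=5)
print(sql)
```

You can paste the resulting query into the BigQuery console, or run it with the `google-cloud-bigquery` client via `Client().query(sql)`.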

GCS buckets

For any data that cannot be loaded at all, we have deployed a dead letter bucket with the suffix -bq-loader-dead-letter. All events that are retried by the repeater and still fail to be inserted into BigQuery end up here.

Iglu

Iglu allows you to publish, test and serve schemas via an easy-to-use RESTful interface. It is split into a few services.

Iglu load balancer

This load balancer distributes inbound traffic and routes it to the Iglu Server.

For further details on the resources, default and required input variables, and outputs, see the google-lb Terraform module.

Iglu Server

The Iglu Server serves requests for Iglu schemas stored in your schema registry.
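As an illustration of what such a request looks like, the sketch below turns an Iglu schema URI into the URL a client would fetch. It assumes the Iglu Server exposes schemas under `/api/schemas/{vendor}/{name}/{format}/{version}`; the server address and the `com.acme` schema are placeholders, and authentication is left out.

```python
import re

# Iglu URI shape: iglu:<vendor>/<name>/<format>/<model>-<revision>-<addition>
IGLU_URI = re.compile(
    r"^iglu:(?P<vendor>[a-zA-Z0-9._-]+)/(?P<name>[a-zA-Z0-9_-]+)/"
    r"(?P<format>[a-zA-Z0-9_-]+)/(?P<version>[0-9]+-[0-9]+-[0-9]+)$"
)


def schema_url(iglu_server_url, iglu_uri):
    """Build the URL for fetching one schema from an Iglu Server."""
    match = IGLU_URI.match(iglu_uri)
    if match is None:
        raise ValueError(f"not a valid Iglu URI: {iglu_uri}")
    # Assumption: schema lookup path of the Iglu Server REST API.
    path = "/api/schemas/{vendor}/{name}/{format}/{version}".format(**match.groupdict())
    return iglu_server_url.rstrip("/") + path


url = schema_url("http://iglu.example.com", "iglu:com.acme/button_click/jsonschema/1-0-0")
print(url)
# prints "http://iglu.example.com/api/schemas/com.acme/button_click/jsonschema/1-0-0"
```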

For further details on the resources, default and required input variables, and outputs, see the iglu-server-ce Terraform module.

Iglu CloudSQL

This is the Iglu Server database where the Iglu schemas themselves are stored.

For further details on the resources, default and required input variables, and outputs, see the cloud-sql Terraform module.

Postgres loader

This is the Snowplow application responsible for reading the enriched and bad data and loading it into Postgres.

For further details on the resources, default and required input variables, and outputs, see the postgres-loader-pubsub-ce Terraform module.

BigQuery loader

This is the Snowplow application responsible for reading the enriched data and loading it into BigQuery.

For further details on the resources, default and required input variables, and outputs, see the bigquery-loader-pubsub-ce Terraform module.

Next, start tracking events from your own application.